The tech world is abuzz with excitement as whispers of groundbreaking developments in AI quality and speed circulate rapidly. Rumors suggest GPT-5 may debut within just two weeks, accompanied by leaked internal test insights that hint at remarkable capabilities. At the same time, signs point to GPT-6 already being in the training phase, pushing the boundaries of innovation. Amid these developments, intriguing reports emerge of an “Ultraman”-themed project utilizing millions of GPUs, signaling a new era of AI-powered entertainment. As anticipation builds, we explore the latest leaks and what they might mean for the future of artificial intelligence.

Anticipating the Arrival of GPT-5 and Its Potential Impact on AI Development

As news leaks out about GPT-5’s imminent release within just two weeks, anticipation is reaching a fever pitch among AI enthusiasts and industry insiders. Early internal tests suggest groundbreaking improvements in contextual understanding and problem-solving capabilities, hinting at a new era for conversational AI. Excitement also surrounds the possibility of more robust safety features and better alignment with human intentions, perhaps bridging the gap between artificial and human intelligence more seamlessly than ever before.

Meanwhile, whispers about GPT-6 have already begun circulating, with some reports claiming the model is in the initial stages of training, leveraging millions of GPUs during an ultra-optimized training cycle often dubbed “Ultraman.” Such massive computational resources might enable GPT-6 to handle complex reasoning tasks and generate highly nuanced outputs. The rapid progression hints at an arms race in model size and sophistication, which could reshape AI development strategies globally. Below is a brief overview of the possible timeline:

| Event | Expected Date | Meaning |
|---|---|---|
| GPT-5 Release | In 2 weeks | Next-gen interface & safety enhancements |
| GPT-6 Training Starts | Month 1 | Massive GPU deployment & cutting-edge architecture |
| Public Alpha of GPT-6 | Q3 2024 | High-level reasoning & contextual dialog |

Unveiling the Latest Internal Leaks and What They Mean for Stakeholders

Recent internal leaks have sent ripples through the tech community, hinting at groundbreaking advancements on the horizon. Rumors suggest that GPT-5 might be debuting within two weeks, accompanied by a flurry of beta testing insights that hint at unprecedented capabilities. These leaks reveal a focus on enhanced contextual understanding, faster processing times, and more natural interactions, signaling a potential leap forward for AI usability. Stakeholders, from developers to enterprise clients, are closely monitoring these signals to gauge the possible impact on product pipelines and competitive positioning.

Meanwhile, whispers about GPT-6 are gaining traction, with sources indicating that training on a colossal scale has already begun—possibly involving over one million GPUs dedicated solely to refining its architecture. An intriguing snippet suggests that Ultraman-themed training datasets might be part of the process, designed to push multi-modal learning boundaries. Stakeholders should consider the implications of such massive resource commitment: not only does it emphasize the tech giant’s aggressive innovation strategy, but it also raises questions about cost, scalability, and future-proofing in an increasingly competitive landscape.

| Leaked Info | Potential Impact | Stakeholder Focus |
|---|---|---|
| GPT-5 Launch in 2 Weeks | Market Dynamics Shift | R&D Adjustment |
| GPT-6 Training Begins | Competitive Edge Enhancement | Resource Allocation |

Exploring the Progress of GPT-6 Training and Its Implications for Future Innovation

Recent whispers within the AI development community suggest that the groundwork for GPT-6 has already begun behind closed doors, with preliminary training phases reportedly underway. These early stages involve an elaborate orchestration of hundreds of thousands of GPUs, akin to the colossal effort behind the visual effects of a Hollywood blockbuster. The scale and ambition hint at future models capable of unprecedented contextual understanding and problem-solving prowess, promising to redefine the boundaries of human-AI interaction.

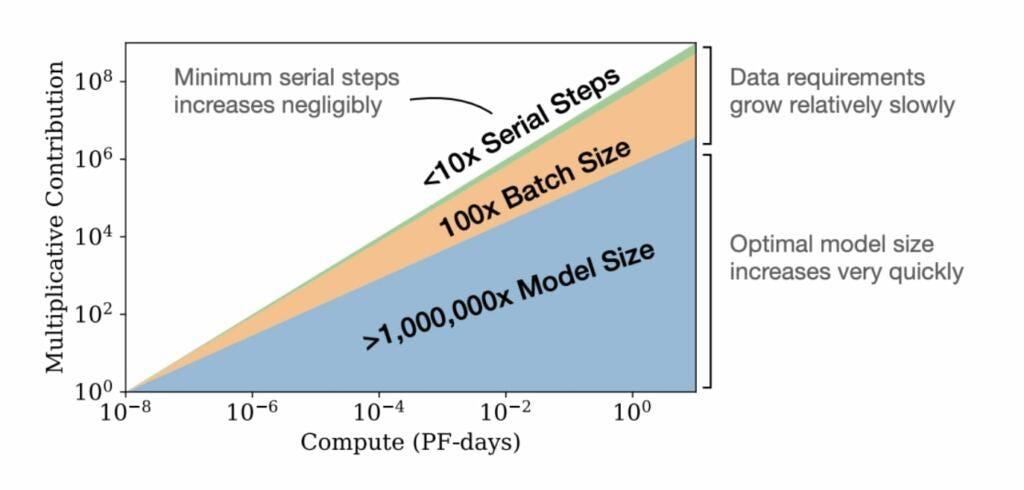

Graphs and performance metrics emerging from the leak indicate rapid advancements in training efficiency, with some experts suggesting that training time may be halved compared to GPT-5’s development cycle. Implications are vast: industries from healthcare to entertainment could soon experience transformative shifts. Key developments include:

- Enhanced language comprehension and creative output

- Improved real-time decision making

- Integration with cutting-edge robotics and augmented reality

Below is a simplified overview of the estimated resource allocation for GPT-6 training:

| Resource | Quantity | Purpose |
|---|---|---|
| GPU Clusters | Million-scale | Massive parallel processing |
| Data Sets | Petabytes | Training and fine-tuning |

Analyzing the Massive GPU Resources Behind Ultraman Spoilers and Industry Trends

Behind the scenes of cutting-edge AI development, the dramatic surge in GPU utilization paints a vivid picture of the industry’s relentless pursuit of innovation. Companies are reportedly deploying hundreds of thousands of GPUs to accelerate training cycles for models like GPT-5 and GPT-6, pushing the boundaries of what’s computationally feasible. Such massive-scale infrastructure not only demands advanced cooling solutions and power management systems but also signals a new era where AI research becomes a symphony of hardware orchestration and software mastery. The hardware explosion is evident in recent leaks suggesting that GPT-6 may already be deep in training, requiring roughly a million GPUs just to manage the workload.

| Accelerator Type | Units Deployed | Purpose |
|---|---|---|
| NVIDIA A100 | 500,000+ | Model training & testing |
| TPU v4 | 200,000 | Data center acceleration |
| Custom GPU Clusters | ~300,000 | Reinforcement learning & large-scale simulations |
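As a quick sanity check, the rows of the table above can be added up to see whether they line up with the "roughly a million" figure mentioned in the leaks. The short Python sketch below does that arithmetic. The numbers are copied from the table and remain rumored and unverified, "500,000+" and "~300,000" are treated as exact only for illustration, and the names `deployments`, `total_units`, and `shares` are hypothetical helpers introduced here.

```python
# Rumored unit counts copied from the table above (unverified).
# "500,000+" and "~300,000" are approximate in the source; they are
# rounded to exact values here purely for the sake of the arithmetic.
deployments = {
    "NVIDIA A100": 500_000,
    "TPU v4": 200_000,
    "Custom GPU Clusters": 300_000,
}


def total_units(units_by_type):
    """Return the total number of accelerator units across all rows."""
    return sum(units_by_type.values())


def shares(units_by_type):
    """Return each row's fraction of the total unit count."""
    total = total_units(units_by_type)
    return {name: units / total for name, units in units_by_type.items()}


if __name__ == "__main__":
    print(f"Total accelerators: {total_units(deployments):,}")
    for name, share in shares(deployments).items():
        print(f"{name}: {share:.0%}")
```

Under these rounded assumptions the three rows sum to 1,000,000 units, split 50% / 20% / 30%, which is consistent with the rumored scale but says nothing about whether the underlying figures are accurate.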

The scale and sophistication of these GPU deployments highlight a clear industry trend: exponential growth in computational resources is necessary to achieve breakthroughs in AI technology. As Ultraman spoilers leak and industry rumors swirl about upcoming models, the stark reality remains: unprecedented hardware investments are fueling the next wave of industry giants, shaping the future of entertainment, AI, and beyond.

Final Thoughts

As the AI landscape accelerates at an unprecedented pace, whispers of GPT-5’s imminent release and the tantalizing hints of GPT-6’s early training offer a glimpse into a future reshaped by innovation. Meanwhile, the surging power behind Ultraman’s storytelling, propelled by millions of GPUs, reminds us that the boundaries between technology and entertainment continue to blur. As we stand on the cusp of these breakthroughs, one thing remains clear: the next chapter in AI and digital entertainment promises to be more dynamic and captivating than ever before. Stay tuned, as the future unfolds with each new revelation.