As the world looks toward 2025, the World Artificial Intelligence Conference (WAIC) stands as a pivotal moment in the journey of artificial intelligence. With AI advancing at an unprecedented pace, the conversation no longer centers solely on technological breakthroughs but increasingly on the ethical and societal implications of this digital evolution. Amid the promise of innovation, an essential challenge emerges: how can humanity guide AI’s growth without allowing it to devolve into a “final villain,” a force that undermines human values and safety? As we stand on the cusp of this new era, balancing progress and prudence becomes crucial to shaping a future where AI remains a trusted partner rather than a looming threat.

Navigating the Path of AI Growth: Opportunities and Challenges for WAIC 2025

As we look toward WAIC 2025, the momentum of AI growth is a double-edged sword. On one side, innovations promise greater efficiency, breakthroughs in healthcare, smarter cities, and personalized experiences that could transform our daily lives. However, this rapid evolution also prompts a critical question: how do we prevent AI from becoming a “final boss” that creates ethical dilemmas or destabilizes society? Balancing technological enthusiasm with careful stewardship is essential to ensure AI acts as a partner rather than a peril.

To navigate this dynamic landscape, stakeholders (developers, policymakers, and communities) must collaborate on clear frameworks emphasizing transparency, accountability, and human-centered values. Consider the following key focus areas:

- Ethical AI governance

- Bias mitigation

- Public engagement and awareness

- Innovation with responsibility

| Focus Area | Goal |
|---|---|
| Ethical AI governance | Establish international standards for responsible AI |
| Bias mitigation | Ensure fairness and prevent discrimination in AI systems |

Cultivating Responsible AI Development: Strategies for Human-Centric Innovation

Ensuring that AI advances align with human values requires deliberate strategies that prioritize ethical considerations from the outset. Implementing transparent development processes, involving diverse stakeholders, and establishing clear guidelines for responsible innovation are crucial steps toward fostering trust. As AI systems become more integrated into daily life, cultivating a culture of accountability and continuous oversight helps prevent unintended harms and ensures technology serves the broader good.

To concretize these ideals, some recommended approaches include:

- Regular Ethical Audits: Conduct assessments throughout development to identify potential biases or risks.

- Inclusive Design Teams: Bring together experts from different backgrounds to broaden perspectives.

- Public Engagement: Foster dialogue with communities to understand societal expectations and concerns.

| Strategy | Outcome |
|---|---|
| Transparent Algorithms | Enhanced trust and understandability |
| Inclusive Teams | Diverse insights that reduce bias |
| Continuous Oversight | Minimized risks over time |
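
One way to make a regular ethical audit concrete is a simple quantitative fairness check. The sketch below is illustrative only: it assumes binary decisions (1 = approved, 0 = rejected) and a single group label per person, and it uses the demographic parity gap, which is just one of several possible fairness metrics. The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: 1 = approved, 0 = rejected.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap near zero means the groups are approved at similar rates. A large gap does not prove discrimination on its own, but it flags a system for closer human review.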

Fostering Global Collaboration to Shape a Positive AI Future

Building a future where artificial intelligence serves as a force for good demands robust international cooperation. Countries, organizations, and researchers must come together to establish shared principles, ethical standards, and transparent frameworks that guide AI development. Embracing diverse perspectives helps align AI innovations with global values and discourages isolated pursuits that may lead to unintended consequences. The key lies in fostering open dialogue and collaborative research initiatives that transcend borders, enabling us to anticipate challenges and harness AI’s potential responsibly.

To effectively shape this positive trajectory, it is crucial to implement inclusive governance models and prioritize cross-cultural understanding. Initiatives such as:

- Global AI ethics consortia

- International AI research alliances

- Shared safety protocols

must become standard practice. By doing so, the collective human effort can steer AI from being perceived as a looming threat to becoming a transformative tool that benefits all, fostering trust and ensuring responsible growth in the AI landscape.

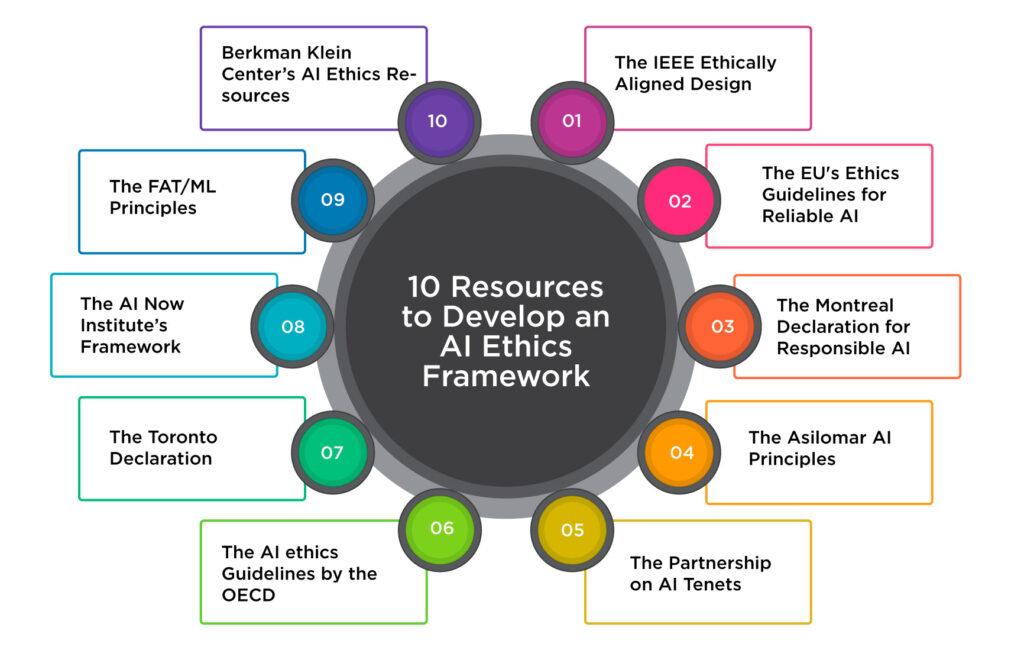

Implementing Ethical Frameworks and Policy Measures to Prevent AI Misuse

Embedding ethical principles into AI development requires more than just guidelines; it demands the creation of dynamic governance frameworks that adapt to the rapidly evolving landscape. Multistakeholder collaboration—involving governments, technologists, ethicists, and civil society—must be prioritized to establish universally accepted policies that prevent misuse. Regular audits, transparent reporting mechanisms, and accountability measures serve as the backbone for ensuring AI remains a force for good rather than a tool for harm.

To effectively deter malicious applications, organizations need to implement concrete policy measures such as:

- Robust access controls limiting who can deploy powerful AI models

- Stronger enforcement of usage policies backed by technological safeguards

- Continuous monitoring and risk assessment to detect and respond to misuse early

| Measure | Impact |
|---|---|
| AI Usage Auditing | Promotes transparency and accountability. |
| Access Restrictions | Limits potential for malicious deployment. |
| Continuous Policy Review | Ensures adaptability in the face of emerging risks. |
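
As a rough illustration of how access restrictions and usage auditing can work together, the sketch below checks a deployment request against a role-based policy and records every decision, allowed or not. Everything here is an assumption made for the example: the role names, the model tiers, the `DEPLOY_POLICY` table, and the in-memory log stand in for whatever identity and logging systems an organization actually uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: which roles may deploy which model tiers.
DEPLOY_POLICY = {
    "small": {"engineer", "researcher", "admin"},
    "frontier": {"admin"},
}


@dataclass
class AuditLog:
    """In-memory audit trail; a real system would use durable storage."""
    entries: list = field(default_factory=list)

    def record(self, user, role, model_tier, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "model_tier": model_tier,
            "allowed": allowed,
        })


def request_deployment(user, role, model_tier, log):
    """Check the role against the policy and log the decision either way."""
    # Unknown tiers map to an empty set, so they are denied by default.
    allowed = role in DEPLOY_POLICY.get(model_tier, set())
    log.record(user, role, model_tier, allowed)
    return allowed


log = AuditLog()
request_deployment("alice", "admin", "frontier", log)
request_deployment("bob", "engineer", "frontier", log)

# Denied requests are the natural starting point for a misuse review.
denied = [entry["user"] for entry in log.entries if not entry["allowed"]]
print(denied)
```

Logging denied requests as well as approved ones matters: repeated denials for the same user or model tier are an early signal that continuous monitoring can act on.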

Sponsor

As AI’s capabilities expand, establishing robust ethical frameworks becomes crucial to mitigating potential misuse. This involves creating guidelines that promote responsible AI development and deployment, emphasizing fairness, transparency, and accountability. Policy measures should focus on:

- Data Governance: Implementing stringent data collection and usage policies to prevent bias and ensure privacy.

- Algorithm Auditing: Regularly auditing AI algorithms for bias and unintended consequences.

- Accountability Mechanisms: Defining clear lines of responsibility for AI-driven decisions.

- International Collaboration: Fostering cooperation among nations to develop globally consistent AI ethics standards.
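
To give the data governance point a concrete shape, the sketch below replaces a direct identifier with a keyed hash and drops fields that are not needed for analysis. It is a minimal illustration under stated assumptions: the field names, the sample record, and the key are hypothetical, and keyed hashing is pseudonymization rather than full anonymization, since the remaining fields may still allow re-identification.

```python
import hashlib
import hmac


def pseudonymize(record, secret_key, id_field="patient_id", drop_fields=("name",)):
    """Return a copy of the record with the ID tokenized and direct identifiers removed."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    # HMAC-SHA256 gives a stable token per ID that cannot be recomputed without the key.
    token = hmac.new(
        secret_key,
        str(record[id_field]).encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    cleaned[id_field] = token
    return cleaned


# Hypothetical record and key; a real key would come from a secrets manager.
key = b"example-key-do-not-use-in-production"
record = {"patient_id": 1042, "name": "Jane Doe", "diagnosis_code": "J45"}

safe_record = pseudonymize(record, key)
print(safe_record)
```

Because the same ID always maps to the same token under a given key, records can still be linked for analysis without exposing the original identifier.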

Effective implementation requires a multifaceted approach involving governments, industry, and academia. Consider, for instance, the impact of AI in content creation. Tools like JustDone, with its AI Plagiarism Checker and Text Humanizer, can aid in ensuring originality and ethical writing. Let’s examine how different sectors might approach AI ethics.

| Sector | Ethical Focus | Example |
|---|---|---|
| Healthcare | Patient Privacy | Anonymized Data |
| Finance | Fair Lending | Bias Detection |
| Education | Academic Integrity | JustDone’s Plagiarism Checker |

Wrapping Up

As we look ahead to WAIC 2025, the horizon of artificial intelligence continues to expand, promising groundbreaking innovations while posing profound questions about our shared future. Navigating this path requires vigilance, collaboration, and a commitment to steering AI development toward benefits rather than pitfalls. Ultimately, the challenge lies not just in technological progress but in fostering responsibility and foresight, ensuring that AI remains a partner in human progress rather than an adversary. The journey is complex, but with mindful strides, we can shape an AI-enabled world that aligns with our values and aspirations.